Principal Investigator (PI): Anthony Arendt, University of Washington, Seattle, WA

Co-Investigators (Co-PIs): Joe Hamman, University Corporation for Atmospheric Research (UCAR), Boulder, CO; Dan Pilone, Element 84, Inc., Alexandria, VA

Introduction

Data-intensive scientific workflows are at a pivotal stage in which traditional local computing resources can no longer meet scientists' storage or computing demands. The Earth System Sciences (ESS) community is facing an explosion of data volumes: new datasets, sourced from models, in-situ observations, and remote sensing platforms, are being released at volumes too large to store even at medium to large High Performance Computing (HPC) centers.

NASA estimates that by 2025 it will be using commercial cloud services (e.g., Amazon Web Services (AWS)) to store upwards of 250 Petabytes (PB) of Earth Observing System Data and Information System (EOSDIS) data. Availability of these data in cloud environments, co-located with a wide range of computing resources, will revolutionize how scientists use these datasets and provide opportunities for important scientific advancements. Fully leveraging these opportunities will require new approaches in the way the ESS community handles data access, processing, and analysis.

The technologies developed in this project will be deployable on the commercial cloud infrastructure where NASA EOSDIS data are expected to be stored. Today, tools for working with these datasets typically consist of convenient interfaces for discovering and downloading data (e.g., Earthdata Search) from individual EOSDIS Distributed Active Archive Centers (DAACs). As many of these DAACs move to cloud storage, researchers will gain immense opportunities and also face specific challenges.

This project is facilitating the ESS community’s transition into cloud computing with technologies that build on existing open-source tools (e.g., Python, Jupyter) and on the growing Pangeo ecosystem.

Read how NASA’s Earth Science Data and Information System (ESDIS) Project is preparing for user needs while moving EOSDIS data to the cloud for ease of access in EOSDIS Data in the Cloud: User Requirements.

Project Work Summary

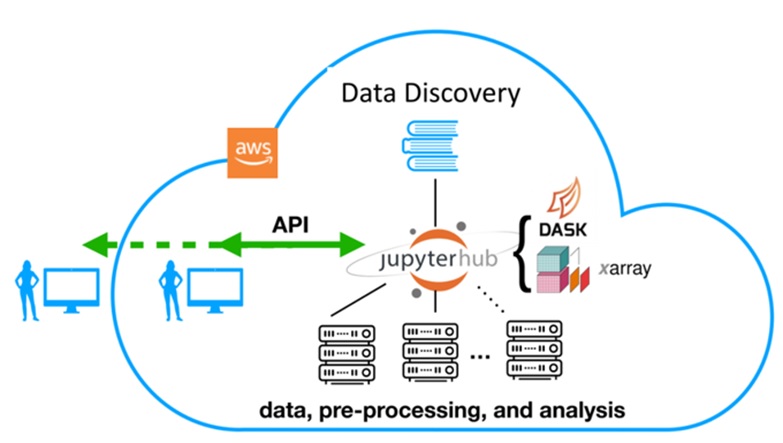

The first task was to deploy a scalable, cloud-based JupyterHub on AWS for community use. JupyterHub is a multi-user, multi-language interactive computing environment that facilitates open-ended, exploratory analysis and data visualization. Notebooks developed on JupyterHub are both functional and fluid, in the manner of an “executable paper” that combines data, processing, and interpretation. This is a necessary departure from traditional publication as a sequence of static artifacts. JupyterHub resources are combined with a variety of other offerings and are available through the Pangeo Cloud section of the Pangeo website.

The second task was to integrate existing NASA data discovery tools with cloud-based data access protocols. Existing data discovery tools, such as the Common Metadata Repository (CMR) and Global Imagery Browse Services (GIBS), provide convenient access to dataset metadata, but they leave the access, retrieval, and processing steps to individual users. The team developed an advanced Python application programming interface (API) that leverages high-level tools such as xarray and Dask, allowing scientists to accelerate their analyses. Integrating this API with the Pangeo ecosystem gives it access to cutting-edge scientific tools for pre-processing, regridding, machine learning, and visualization.
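The discovery step described above can be illustrated with a minimal sketch. It builds a granule query against CMR's public search endpoint and pulls data-access links out of a JSON response. The endpoint URL and the `short_name`, `temporal`, `bounding_box`, and `page_size` parameters follow the public CMR Search API. The collection short name, the helper function names, and the sample response are hypothetical placeholders. This is not the project's own API. No network request is made, so the parsing step runs on a hand-written stand-in response.

```python
from urllib.parse import urlencode

# Public CMR granule search endpoint (JSON response format).
CMR_GRANULES_URL = "https://cmr.earthdata.nasa.gov/search/granules.json"


def build_granule_query(short_name, start, end, bbox, page_size=50):
    """Hypothetical helper: return a CMR granule-search URL.

    bbox is (west, south, east, north) in decimal degrees;
    start and end are ISO 8601 timestamps.
    """
    params = {
        "short_name": short_name,
        "temporal": f"{start},{end}",
        "bounding_box": ",".join(str(v) for v in bbox),
        "page_size": page_size,
    }
    return f"{CMR_GRANULES_URL}?{urlencode(params)}"


def data_links(response):
    """Hypothetical helper: collect data links from a parsed CMR JSON response.

    Links whose "rel" ends in "/data#" point at the granule files;
    browse and metadata links are skipped.
    """
    links = []
    for entry in response.get("feed", {}).get("entry", []):
        for link in entry.get("links", []):
            if link.get("rel", "").endswith("/data#"):
                links.append(link["href"])
    return links


url = build_granule_query(
    "EXAMPLE_SHORT_NAME",  # placeholder, not a real collection
    "2019-01-01T00:00:00Z",
    "2019-01-31T23:59:59Z",
    (-125.0, 25.0, -67.0, 53.0),
)

# Hand-written stand-in for a parsed CMR response (not real data).
sample = {"feed": {"entry": [{"links": [
    {"rel": "http://esipfed.org/ns/fedsearch/1.1/data#",
     "href": "https://example.com/granule_001.nc"},
    {"rel": "http://esipfed.org/ns/fedsearch/1.1/browse#",
     "href": "https://example.com/granule_001.png"},
]}]}}

print(url)
print(data_links(sample))  # ['https://example.com/granule_001.nc']
```

In a cloud workflow, the returned links would then be opened lazily with xarray and Dask rather than downloaded one by one.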

The third task was to use this API for data discovery and processing as the basis of a cloud-optimized framework for remote data retrieval. This framework goes beyond simple slice-and-download operations (e.g., Open-source Project for a Network Data Access Protocol (OPeNDAP)). It uses the same API for discovery, access, and processing to also provide lightweight server-side processing.
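The difference between slice-and-download access and data-proximate processing can be sketched in plain Python. In the toy model below, a "server" holds a gridded variable split into time chunks, standing in for chunked cloud storage such as Zarr. A slice request returns every value in the spatial subset. A reduce request instead computes the time mean next to the data and returns one value per grid cell. The chunk layout, function names, and request shapes are hypothetical and do not describe the project's actual service.

```python
def make_store(n_time, ny, nx, chunk_size):
    """Build a synthetic store of (time, y, x) grids split into time chunks.

    Each value is t + y + x, which makes results easy to check by hand.
    """
    grids = [[[float(t + y + x) for x in range(nx)] for y in range(ny)]
             for t in range(n_time)]
    return [grids[i:i + chunk_size] for i in range(0, n_time, chunk_size)]


def slice_request(store, y0, y1, x0, x1):
    """Slice-and-download: return every value in the spatial subset."""
    return [[row[x0:x1] for row in grid[y0:y1]]
            for chunk in store for grid in chunk]


def reduce_request(store, y0, y1, x0, x1):
    """Server-side reduction: return only the time mean of each cell.

    Chunks are visited one at a time. The reducer's working state is a
    running sum over the subset, whatever the length of the time axis.
    """
    ny, nx = y1 - y0, x1 - x0
    total = [[0.0] * nx for _ in range(ny)]
    count = 0
    for chunk in store:
        for grid in chunk:
            for j in range(ny):
                for i in range(nx):
                    total[j][i] += grid[y0 + j][x0 + i]
            count += 1
    return [[v / count for v in row] for row in total]


store = make_store(n_time=10, ny=4, nx=5, chunk_size=3)
downloaded = slice_request(store, 1, 3, 0, 2)
reduced = reduce_request(store, 1, 3, 0, 2)

# Count how many values each request sends back to the client.
n_down = sum(len(row) for grid in downloaded for row in grid)
n_red = sum(len(row) for row in reduced)
print(n_down, n_red)  # 40 4
print(reduced)        # [[5.5, 6.5], [6.5, 7.5]]
```

Only four means leave the "server" in the second case, compared with forty raw values in the first. This ratio equals the number of time steps, so it grows with the length of the record. In practice, libraries such as xarray and Dask would fill this role rather than hand-written loops.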

The team demonstrated these tools with several datasets, including the North American Land Data Assimilation System (NLDAS), the Gravity Recovery and Climate Experiment (GRACE), and Sentinel-1 synthetic aperture radar (SAR). The example applications serve as templates for the broader community and as real-world test cases for evaluating the cloud services and applications we develop. The Pangeo Gallery showcases scientific use cases derived from the Pangeo infrastructure.

Results

The project has accelerated a shift in the ESS culture toward cloud computing by providing short but intensive training opportunities that enable new ways for scientists to collaborate and make full use of NASA EOSDIS datasets.

Additionally, the project has made steady progress towards the goal of facilitating the geoscience community’s transition into cloud computing by building on top of the growing Pangeo ecosystem.

Project-Related Resources

Publications and Presentations on GitHub

Learning and teaching materials

Publications (listed alphabetically)

Abernathey, R., Hamman, J., Heagy, L., Fernandes, F. & Hoese, D. (In press). “Open Source Frameworks for Earth Data Science are Blossoming.” EOS. In revision.

Eynard-Bontemps, G., Abernathey, R., Hamman, J., Ponte, A. & Rath, W. (2019). “The Pangeo Big Data Ecosystem and its use at CNES.” In Soille, P., Loekken, S. & Albani, S. (2019). Proceedings of the 2019 conference on Big Data from Space (BiDS’2019): 49-52. EUR 29660 EN, Publications Office of the European Union, Luxembourg [doi:10.2760/848593].

Hamman, J., Robinson, N. & Abernathey, R. (2019). “Science needs to rethink how it interacts with big data.” Nature (in revision).

Presentations (listed alphabetically)

Abernathey, R., Hamman, J. & Miles, A. (2018). “Beyond netCDF: Cloud Native Climate Data with Zarr and Xarray.” Abstract IN33A-06. 2018 American Geophysical Union (AGU) Fall Meeting, Washington, D.C.

Arendt, A., Hamman, J., Rocklin, M., Tan, A., Fatland, D.R., Joughin, J., Gutmann, E.D., Setiawan, L. & Henderson, S. (2018). “Pangeo: Community tools for analysis of Earth Science Data in the Cloud.” Abstract IN54A-05. 2018 AGU Fall Meeting, Washington, D.C.

Hamman, J. (2018). “The Pangeo ecosystem for data proximate analytics.” 2018 Workshop on developing Python frameworks for earth system sciences. European Centre for Medium-Range Weather Forecasts (ECMWF), Reading, UK.

Hamman, J. & Abernathey, R. (2019). “Handle ‘Big’ Larger-than-memory Data.” Clinic. 2019 Oceanhackweek, Seattle, WA.

Hamman, J., Arendt, A., Pilone, D., Henderson, S., Fatland, R., Tan, A. & Pawloski, A. (2019). “Community tools for analysis of NASA Earth Observing System Data in the Cloud.” Poster. 2019 NASA Earth Science Data System Working Group (ESDSWG) Meeting, Annapolis, MD.

Hamman, J. & Banihirwe, A. (2019). “Pangeo: Scalable Geoscience Tools in Python – Xarray, Dask, and Jupyter.” Clinic. 2019 Community Surface Dynamics Modeling System (CSDMS) Meeting, Boulder, CO.

Hamman, J., Abernathey, R. & Henderson, S. (2018). “Pangeo: Scalable Geoscience Tools in Python – Xarray, Dask, and Jupyter.” Abstract WS22. 2018 AGU Fall Meeting, Washington, D.C.

Hamman, J., Abernathey, R., Holdgraf, C., Panda, Y. & Rocklin, M. (2018). “Pangeo and Binder: Scalable, shareable and reproducible scientific computing environments for the geosciences.” Abstract IN53A-03. 2018 AGU Fall Meeting, Washington, D.C.

Hamman, J. & Rocklin, M. (2018). “Pangeo: A community-driven effort for Big Data geoscience.” Seminar. UK Met Office, Exeter, UK.

Hanson, M. (2019). “How Open Communities are Revolutionizing Science.” Keynote. FOSS4G 2019, Bucharest, Romania.

Henderson, S., Arendt, A., Tan, A. & Pawlowski, A. (2019). “Using Pangeo JupyterHubs to work with large public datasets.” Workshop. 2019 Earth Science Information Partners (ESIP) Summer Meeting, Tacoma, WA.

Henderson, S.T. (2019). “Benefits of InSAR archives on the Cloud for volcano monitoring.” Cascades Volcano Observatory NISAR CVO Workshop.

— (2019). “Cloud Native Analysis of Earth Observation Satellite Data with Pangeo.” ESIP Tech Dive Webinar.

— (2019). “Moving satellite radar processing and analysis to the Cloud.” UNAVCO ISCE Short Course.

— (2019). “Pangeo: An open community platform for big data geoscience analysis and visualization.” Woods Hole Oceanographic Institution.

— (2019). “Pangeo: Community tools for analysis of Earth Science Data in the Cloud.” Cascadia Chapter (CUGOS) of the Open Source Geospatial Foundation (OSGeo).

— (2019). “Scalable, data-proximate cloud computing for Earth Science research.” Moderated session. ESIP Summer Meeting, Tacoma, WA.

— (2019). “Synthesizing open-source software for scalable cloud computing with NASA imagery.” FOSS4G-NA Conference, San Diego, CA.

— (2018). “Regional interferometric synthetic aperture radar (InSAR) snowpack measurements.” Boise State Geosciences Department Seminar, Boise, ID.

— (2018). “Scalable InSAR processing and analysis in the Cloud with applications to geohazard monitoring in the Pacific Northwest.” Abstract G41B-0683. 2018 AGU Fall Meeting, Washington, D.C.

— (2018). “Towards automated Sentinel-1 satellite radar imagery classification for hazard monitoring.” University of Washington Seismolunch seminar, Seattle, WA.

Workshops

2019 Pangeo Community Meeting: Organized and hosted by team members Arendt, Hamman, Henderson, Tan, and Fatland.

2019 ESDSWG: Attended by team members Arendt and Hamman.

2019 UW Hackweeks (Ocean, Geo): Organized by team members Arendt, Tan, and Henderson and incorporating elements from this project.