“Not bad for a shoebox.”

This quip, uttered by an engineer at NASA’s Near Earth Network (NEN) receiving station on Wallops Island, Virginia, on Friday, March 22, 2019, is something NASA Oceanographer Gene Carl Feldman will never forget.

The comment came in response to the successful downlink and processing of the first image from the HawkEye imager aboard the University of North Carolina-Wilmington’s (UNCW) SeaHawk CubeSat, currently in low Earth orbit approximately 575 kilometers above the surface.

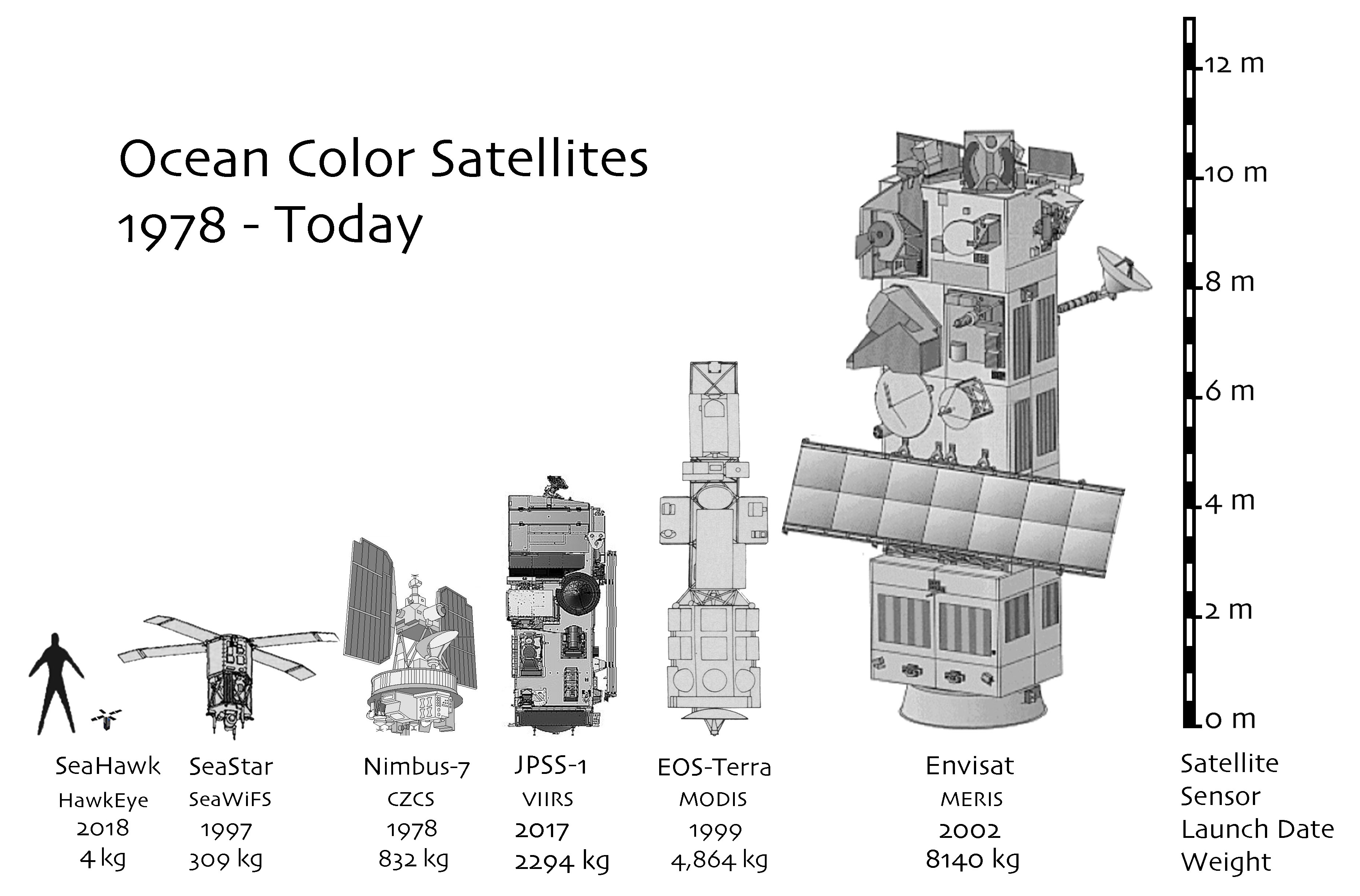

The goal of the SeaHawk mission was to prove a concept: that a 3U (three-unit) CubeSat, a small satellite (also known as a nanosatellite) built from three 10-centimeter cube units and measuring just 10 x 10 x 30 centimeters, could collect scientifically credible ocean color data comparable to those of previous ocean color satellite missions. The successful receipt of the first image proved that it could.

“The mission could have ended at that moment, and we could have declared 100 percent success,” said Feldman, who specializes in ocean color remote sensing. “This was the first X-band downlink from a CubeSat that NASA had ever done. The data came down, it was processed flawlessly through the system — it was amazing! Everything worked. Here you have this 11-meter dish collecting data from something you can hold in one hand.”

The mission could have ended then, but of course it didn’t. Although SeaHawk was pursued as a proof of concept, Feldman admits he had bigger plans for it from the start.

“I didn’t think it would be worth NASA’s investment to do a one-off, get one image, prove the concept, and go home,” he said. “My goal from the beginning was to integrate this mission into the infrastructure that we have built over the past 25 years to support ocean color satellites, and to demonstrate that a CubeSat can be treated like a normal, credible scientific mission.”

Feldman also intended the mission to be of service to ocean color researchers worldwide.

“We wanted this mission to be part of the global ocean color community, so that people could not only see that the data are useful, but actually schedule the spacecraft and have it take images where they want,” he said.

Feldman and the other members of the SeaHawk/HawkEye mission team have accomplished these objectives as well, but not overnight. After two and a half years of commissioning that Feldman described as “at times quite challenging,” SeaHawk entered routine operations on Monday, June 21, 2021.

SeaHawk’s Origin

SeaHawk’s origin story begins approximately ten years ago, following the unexpected loss of the Sea-viewing Wide Field-of-view Sensor (SeaWiFS), an 8-band ocean color scanner aboard Orbital Sciences’ SeaStar satellite. SeaWiFS is celebrated as the first instrument to give scientists a complete measure of biological life on the planet’s land and ocean surfaces from a single sensor, and it operated for 13 years, 8 years longer than initially forecast.

“After SeaWiFS died, John [Morrison, professor at UNCW’s Center for Marine Science] and I were talking and we wondered if we could do something that builds on the SeaWiFS legacy, but that doesn’t cost millions of dollars and can prove a concept — that you can do small, inexpensive, high-resolution, high-quality ocean color from space,” Feldman said. “That was the goal: Can we do this?”

To ensure they could, Feldman and Morrison, who had both worked on the SeaWiFS mission, began assembling a team of experienced professionals from the academic, private, and public sectors who had significant expertise in designing and building satellite instruments, manufacturing satellite busses and components, and operating satellite missions.

The team began to take shape in October 2012, while Feldman was attending the Ocean Optics XXI conference in Glasgow, Scotland. While there, he arranged a meeting with Craig Clark, the founder of AAC Clyde Space, a company that builds nanosatellite spacecraft and spacecraft subsystems and provides mission services, to discuss working together on an ocean color CubeSat mission. After touring the company’s facility and meeting the staff, he thought the company would be a good fit, and both parties agreed to collaborate.

As for the HawkEye instrument’s development, it was a Friday when Feldman and Morrison asked Alan Holmes, President of Cloudland Instruments and systems engineer for the SeaWiFS instrument, if he’d be interested in designing an ocean color instrument with similar bands for a CubeSat. Holmes was interested, and he appreciated the opportunity to build an instrument based on his own designs, even if Clyde Space’s plans for the SeaHawk bus didn’t give him much space to work with.

“The Clyde Space concept was four inches on a side, so I went out and got the measurements and said, ‘Okay, I’ve got 100 millimeters on each side,’ and we came up with a concept that would work,” he said. “Then Gene and John said, ‘Don’t forget they have to put a skin over it and there’s a solar panel and there’s a skin under that. The bottom line is now you’ve only got 92 millimeters on a side.’ [It] didn’t fit anymore, so I had to do another design to squeeze it in a little more. The farther along we went the smaller it got, so there was a bit of a challenge there.”

Despite the challenge, Holmes came back with a design for an instrument that fit the bus by the following Monday.

With Clyde Space on board and an instrument design from Holmes in hand, Feldman and Morrison went in search of funding. Their proposals to the Office of Naval Research and NASA’s Instrument Incubator Program were ultimately rejected, but while attending an ocean color research meeting in Washington, DC, Feldman learned from a former colleague that the nonprofit Moore Foundation was looking to fund innovative programs with a biological focus. Six months later, Feldman and Morrison submitted a formal proposal to the foundation for a launch-ready CubeSat with an ocean color instrument. The foundation accepted the proposal and, because of the inherent risk of working in space, suggested that they build two spacecraft and two instruments, and then doubled their budget.

In 2017, after the SeaHawk CubeSats and HawkEye instruments had been built and the team was optimistic that the mission would function as planned, Feldman began working with the Earth Sciences Division at NASA Headquarters on a Space Act Agreement between the agency and UNCW. Under the agreement, UNCW, the owner of the mission, would give NASA all rights to the HawkEye data. In return, NASA agreed to formally participate in the mission, assist with instrument calibration and validation, and manage the collection, archiving, and distribution of all mission data.

NASA’s participation in data collection was critical, Feldman said, because the mission required a departure from the S-band communication frequencies used by most CubeSats.

“Early on we realized we were going to get too much data to bring down with the typical S-band transmitters that are on most CubeSats, so we had to go to X-band, which was brand new at the time,” said Feldman. “NASA had no experience working with X-band CubeSats at the time, but it agreed to let us use the NASA NEN ground stations in Wallops and Alaska to bring the data down. They did a compatibility test with our transmitter. Two Scottish engineers came over to Goddard. They brought over their transmitter and spacecraft simulator, and we went through the full compatibility testing, as every other NASA mission must do, to verify that NASA’s NEN would be able to support the mission. And it worked.”

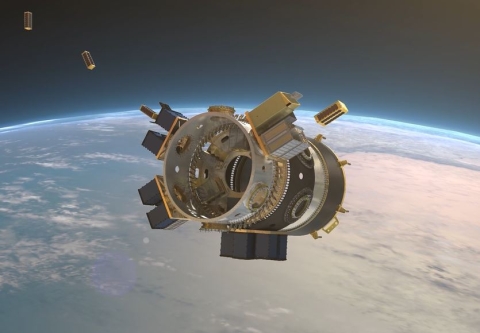

After several months of waiting for a launch opportunity, the flight-ready spacecraft traveled in an overhead compartment on a flight from Scotland to Seattle for integration into the Spaceflight SSO-A SmallSat Express module. SeaHawk then launched from Vandenberg Air Force Base in California on December 3, 2018, aboard a SpaceX Falcon 9 rocket. SeaHawk-1 (the first of the two SeaHawk CubeSats built), carrying the HawkEye-1 Ocean Color Sensor, was one of 64 satellites on the rideshare mission. SeaHawk-2 was built as a backup in case of problems with SeaHawk-1; the team now uses it to test any modifications before implementing them on the on-orbit SeaHawk-1.

Launching SeaHawk with more than 60 other satellites presented an unanticipated challenge, Feldman said, because the first step toward commissioning was acquiring SeaHawk’s beacon signal at the ground station in Glasgow.

“You had 64 spacecraft getting dumped out of a rocket one after another, creating a giant cloud of little dots, and the question was, ‘Which one of these little dots is ours?’” he said. “In the pod [SeaHawk was] in, there were four other CubeSats of the same size, in the exact same orbit, launched roughly at the same time, and then there was a whole bunch of other ones. It took time for them to begin to separate.”

But separate they did, and the ground station was able to hear the beacon and communicate with SeaHawk within a few hours.

Yet, this wasn’t the only challenge the team faced during SeaHawk’s commissioning phase.

“The biggest problem we had was the stability of the satellite. To get good resolution imagery, the satellite must be held steady. As it turns out, that's not easy to attain,” said Morrison. “That is why we had such a long delay between launch and when we reached operations.”

In addition to the stability problems, two solar panels on the sides of the spacecraft, which were supposed to open automatically after launch, failed to deploy. It was later learned that the GPS was also nonfunctional.

“We spent December through March investigating why the two side solar panels failed to deploy and trying to get [Clyde Space] to pop the top and bottom panels, but they were being very cautious,” said Feldman. “By the middle of March, I said, ‘If we can’t pop the top and bottom panels, then we don’t have a mission, because the bottom panel covers the instrument, so you’ve got the lens cap on. If you can’t open that up, game over.’ The top panel was necessary too because that’s where the GPS was. So, I said, ‘We’ve got nothing to lose, let’s try to do this.’”

Feldman was right. To demonstrate that their proof of concept could work, the SeaHawk team needed to take an image, get the image down, and see whether it was credible, and they couldn’t do that if the panels didn’t open. Fortunately, the situation began to improve in mid-March.

“On Wednesday 20th March, the lower solar panel that covered the Hawkeye instrument was successfully deployed exposing the optics of the instrument for the first time since it was sealed in its travel pod back in Glasgow, Scotland, in late September,” Feldman wrote in a message posted to the Earthdata Forum. “Then, on Thursday morning [March 21], a schedule was uploaded to the spacecraft that commanded HawkEye to take its first Earth image as it flew southward over the coast of California.”

The team received confirmation from Clyde Space later that same day that SeaHawk had indeed taken an image and that the data were stored on board, ready for downlink. Feldman’s message continues:

“The next day, Friday morning at 11:35am, as SeaHawk flew over NASA's NEN receiving station at Wallops Island, Virginia, the spacecraft's X-band transmitter was turned on as it reached an elevation of 20 degrees above the horizon and, broadcasting at a rate of 50 million samples per second [from its] tiny antenna, transmitted its data to one of Wallops’s 11-meter antennas.”

Since then, the SeaHawk CubeSat has completed its on-orbit commissioning phase and has been operational since June 21, 2021.

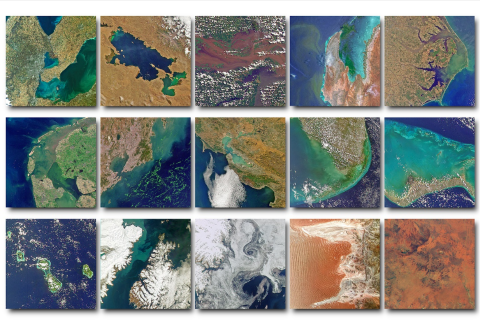

“We’re collecting about 100 new images of the Earth each week that are downlinked at either Wallops or Alaska,” Feldman said. “Within an hour of the data coming down, the data are processed and available on our web browser for anybody in the world to get for free. There is no other CubeSat mission that I can think of that is being treated like an operational space mission.”

“We built the system so that from day one, SeaWiFS data would be available on the web for everybody to access,” he said. “That was unheard of back then. Our very first image from SeaWiFS back in September 1997 was up on the web five minutes after the data came down. That was the model we followed for this mission. No proprietary-ness, no exclusive rights, everybody gets it at the same time.”

When SeaHawk became operational on June 21, all the web services on NASA’s Ocean Color website came online, all the HawkEye datasets became available to users, and all the software and tools for analyzing and manipulating HawkEye data had been validated and tested.

Yet, beyond merely picking up where SeaWiFS left off, Feldman and his colleagues are hopeful that the high-resolution imagery available from the HawkEye instrument will support new discoveries in ocean color research.

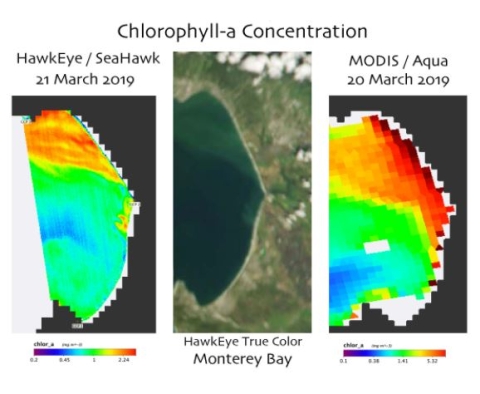

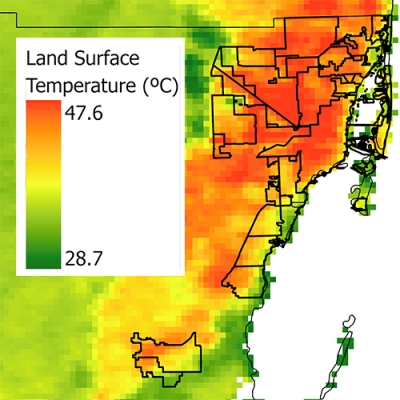

“Imagine you’ve got a 1-kilometer (km) Moderate Resolution Imaging Spectroradiometer (MODIS) pixel,” said Feldman. “Within that 1 km pixel you’ve got approximately 100 100-meter pixels so, in terms of information content, there’s a factor of nearly 100 times more information in 1 km of Hawkeye data than there is in a 1-km MODIS pixel, so there’s a lot more information.”
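Feldman’s “nearly 100 times” is a round figure that assumes 100-meter pixels. Because pixel counts scale with the square of the ratio of pixel sizes, the factor at HawkEye’s nominal 120-meter resolution works out closer to 69. A minimal sketch of the arithmetic, assuming square pixels and ignoring the growth of ground pixels away from nadir (the function name is illustrative only):

```python
def pixels_per_coarse_pixel(coarse_m: float, fine_m: float) -> float:
    """Number of fine pixels that tile one coarse pixel, assuming square pixels."""
    return (coarse_m / fine_m) ** 2


# 1 km MODIS pixel versus 100 m pixels, the round figure used in the quote
print(pixels_per_coarse_pixel(1000, 100))            # 100.0
# 1 km MODIS pixel versus HawkEye's nominal 120 m pixels
print(round(pixels_per_coarse_pixel(1000, 120), 1))  # 69.4
```

Either way, each 1-km MODIS pixel corresponds to dozens of HawkEye pixels, which is what makes the subpixel variability described below visible.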

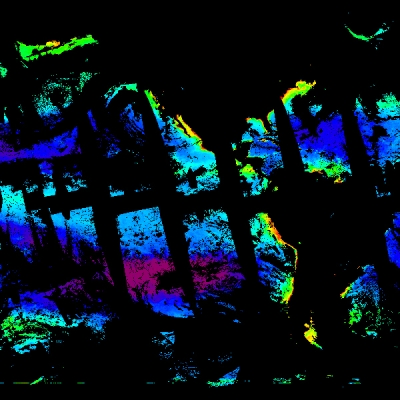

With eight spectral bands (the same number as SeaWiFS), the HawkEye instrument captures images of the ocean and land at a resolution of 120 meters per pixel. Each image measures 1800 x 6000 pixels, covering a ground swath of 216 x 720 km (134 x 447 miles). The instrument uses four linear-array charge-coupled devices (CCDs), each containing three rows of highly sensitive photon detectors that scan the field of view as the satellite passes over the Earth’s surface. The instrument is also designed not to saturate over bright land or clouds, which lets it better resolve detail along coastlines and cloud edges.
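The swath dimensions follow directly from the pixel counts and the 120-meter pixel size. A quick check, assuming a constant 120-meter ground pixel across the whole scene (in practice ground pixels typically grow toward the swath edges and with spacecraft tilt) and treating 1800 pixels as the width and 6000 as the length; `swath_extent_km` is a hypothetical helper:

```python
KM_PER_MILE = 1.609344  # international mile


def swath_extent_km(n_pixels: int, resolution_m: float) -> float:
    """Ground distance spanned by n_pixels of constant size (nadir approximation)."""
    return n_pixels * resolution_m / 1000.0


width_km = swath_extent_km(1800, 120)   # 216.0
length_km = swath_extent_km(6000, 120)  # 720.0
print(width_km, length_km)
# Convert to miles and round to whole miles
print(round(width_km / KM_PER_MILE), round(length_km / KM_PER_MILE))  # 134 447
```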

“There’s a phenomenon called bright target recovery where, when you’re scanning near the edge of a coast, the signal levels off the land are bright and they tend to cause the electronics to corrupt the nearby pixels out over the ocean, and most of the ocean color instruments have trouble getting in close to the coastline,” said Holmes. “So, there was a need for something with higher resolution that could potentially work closer to the shore.”

Such advances in instrument design are significant, said UNCW’s John Morrison.

“Unlike MODIS and other instruments, which can saturate over land because the reflectivity of the ocean is about five percent of what it is from the land, [HawkEye] does not,” he said. “So, for the first time you can look at imagery across the boundary. Hopefully, people will be able to use this imagery in experiments investigating carbon flux from the land across the coastal zone into the open ocean. No one has ever been able to do that.”

Another noteworthy attribute of SeaHawk that improves its imagery is that it tilts.

“We don’t tilt the instrument, the whole damn spacecraft tilts plus or minus 20 degrees to avoid sun glint,” said Feldman. “Right now, it’s the only ocean color instrument that is tilting.”

“It’s not well known, but MODIS doesn’t tilt,” said Holmes. “It looks straight down all the time and sun glint is actually a really big problem. [It’s] a chore to take it out of the data. From a data-processing point of view, when you get a scene that’s just cluttered with bright puffy clouds, and you’re trying to look at the dark ocean in between to do your signal processing and take out the sun glint, it makes it difficult.”
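Sun glint appears where the sensor looks at the mirror-like (specular) reflection of the sun off the sea surface. One common way to quantify how close a view is to glint is the glint angle between the viewing direction and the specular reflection direction. The sketch below computes it for a flat sea surface using standard spherical geometry. It is an illustration, not SeaHawk’s actual pointing logic; `glint_angle_deg` is a hypothetical helper, and treating a 20-degree spacecraft tilt as a 20-degree view zenith angle ignores Earth curvature.

```python
import math


def glint_angle_deg(sun_zenith: float, view_zenith: float, rel_azimuth: float) -> float:
    """Angle (degrees) between the viewing direction and the specular
    reflection of the sun off a flat sea surface.

    rel_azimuth is the sensor azimuth minus the sun azimuth, both measured
    from the target: 0 puts the sensor on the sun's side of the target,
    180 puts it opposite the sun, where glint is strongest.
    """
    ts, tv, phi = (math.radians(a) for a in (sun_zenith, view_zenith, rel_azimuth))
    cos_g = math.cos(ts) * math.cos(tv) - math.sin(ts) * math.sin(tv) * math.cos(phi)
    # Clamp to guard against tiny floating-point excursions outside [-1, 1]
    cos_g = max(-1.0, min(1.0, cos_g))
    return math.degrees(math.acos(cos_g))


sun = 20.0  # illustrative sun zenith angle, in degrees
print(round(glint_angle_deg(sun, 0.0, 0.0), 1))    # nadir view: 20.0
print(round(glint_angle_deg(sun, 20.0, 0.0), 1))   # 20 deg tilt, sun's side: 40.0
print(round(glint_angle_deg(sun, 20.0, 180.0), 1)) # 20 deg tilt, opposite the sun: 0.0
```

With the sun high in the sky, a nadir-only instrument stays within about the solar zenith angle of the glint spot, while tilting the whole spacecraft 20 degrees in the right direction roughly doubles that separation in this example (and tilting the wrong way would look straight into it).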

Taken together, these attributes give HawkEye imagery distinct advantages near coastlines and at fine spatial scales, making it a valuable complement to data from legacy instruments such as MODIS and the Visible Infrared Imaging Radiometer Suite (VIIRS).

“We did a derived chlorophyll image from the very first image of Monterey Bay and compared it to a coincident MODIS image,” Feldman said. “You can see the same patterns, but what you also saw in the HawkEye image was an incredible level of subpixel variability that we’ve never seen before.”

Not surprisingly, the SeaHawk Project Team is keen to share this high-resolution imagery with the global ocean color community.

“This isn’t a NASA mission, it isn’t a UNC-Wilmington mission, it isn’t a Moore Foundation mission—this is the international research community’s mission to use and experiment with,” said Feldman.

To help the community do that, on July 21 the SeaHawk Project Team began accepting image acquisition requests from ocean color researchers via UNCW’s Sustained Ocean Color Observation from Nanosatellites (SOCON) website.

Requests are evaluated and managed by a team at the University of Georgia’s Skidaway Institute of Oceanography led by Sara Rivero-Calle, Assistant Professor of Oceanography and SeaHawk Project Science Lead. The team then passes the requests to Feldman’s group at NASA’s Goddard Space Flight Center, which combines them with requests from NASA priority programs and compiles a schedule. The schedule is then sent to Clyde Space, which communicates with and directs SeaHawk on a weekly basis.

In addition to advancing ocean color research, Feldman and his colleagues also hope SeaHawk can be a model for using CubeSats to obtain remotely sensed data from space in a low-cost way that complements legacy programs.

“SeaHawk is proof that CubeSat technology is a relatively inexpensive and fast method for obtaining high-quality ocean color data,” said Rivero-Calle. “We now know that CubeSats are an excellent way to support larger missions (such as NASA’s Plankton, Aerosol, Cloud and ocean Ecosystem (PACE) and Geosynchronous Littoral Imaging and Monitoring Radiometer (GLIMR) missions) with complementary observations, ensure continuity of observations, provide support between missions, offer low-cost access to space, and answer oceanography questions.”

Holmes agrees and, for him, the take-home message of the mission is that high-quality ocean color imagery from a CubeSat is possible.

“It works! It was a low-cost instrument that weighed only two pounds, fits in a four-inch cube, and can return a good image,” he said. “You can do good ocean color with it.”

In fact, the success of the SeaHawk mission has Holmes thinking about CubeSat constellations.

“The world is a cloudy place. About 85 percent of the images are wiped out, but you never know which ones you’re going to get. You have to schedule the observations in advance. It’s a crapshoot, particularly when you’re working in the northern or southern parts of the world,” he said. “One motivation for having a lot of small satellites is, if you do have partial cloud cover, the clouds are moving so you can potentially build up an entire scene and you’ll be more likely to see the surface.”
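Holmes’s argument can be made concrete with two small sketches. The first treats his rough 85 percent figure as the probability that any single look is clouded out and assumes looks are independent, which is optimistic because real clouds are correlated in space and time. The second shows the idea of building up a scene from partially cloudy looks by keeping the first cloud-free value for each pixel. The grid representation and function names are hypothetical and do not describe how NASA actually composites ocean color data.

```python
from typing import List, Optional


def prob_at_least_one_clear(p_cloudy: float, n_looks: int) -> float:
    """Chance that at least one of n independent looks is cloud-free."""
    return 1.0 - p_cloudy ** n_looks


# A scene as a grid of values, with None marking a cloud-covered pixel
Grid = List[List[Optional[float]]]


def composite_first_clear(scenes: List[Grid]) -> Grid:
    """Fill each pixel with the first cloud-free value across co-registered scenes."""
    rows, cols = len(scenes[0]), len(scenes[0][0])
    out: Grid = [[None] * cols for _ in range(rows)]
    for scene in scenes:
        for r in range(rows):
            for c in range(cols):
                if out[r][c] is None and scene[r][c] is not None:
                    out[r][c] = scene[r][c]
    return out


for n in (1, 5, 10):
    print(n, round(prob_at_least_one_clear(0.85, n), 2))  # 0.15, 0.56, 0.8

look_a = [[1.0, None], [None, 4.0]]
look_b = [[9.0, 2.0], [3.0, None]]
print(composite_first_clear([look_a, look_b]))  # [[1.0, 2.0], [3.0, 4.0]]
```

Under these simplifying assumptions, a single satellite gets a clear view about 15 percent of the time, while ten looks raise the odds of at least one clear view to about 80 percent, which is the intuition behind a constellation.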

Feldman can imagine constellations of CubeSats too, and for more than just ocean color.

“My hope is that some space agency or some entrepreneur will see an advantage in this and design a constellation of instruments,” said Feldman. “By doing this as an operational mission and showing how it can seamlessly fit into an existing science program, it could evolve into something else down the road.”

Rivero-Calle agrees and says the technology’s flexibility makes it well suited to collecting data that can address other scientific issues.

“One CubeSat by itself is not enough for all the ocean color research questions, for that we would need a constellation,” she said. “Knowing that this works means that we can now design other missions using this technology but with the necessary modifications to answer new and more complex questions. We can change the orbit, the sensor, the power, the antennae, the size, location of ground stations, and so on depending on the science question that we want to answer, but the concept of the mission has been more than successfully proven.”

Not bad for a shoebox, indeed.

Accessing HawkEye Data and Tools

Those interested in exploring and downloading HawkEye data can do so via the HawkEye web page on NASA’s Ocean Color website or through NASA’s Ocean Biology Distributed Active Archive Center (OB.DAAC). On the Ocean Color website, users will find a wealth of information about the instrument and the SeaHawk mission, links to direct data access and Level-1 and Level-2 scene browsers, and supporting documentation about the mission. All data are available for download in NetCDF format.

The Level-1 and Level-2 scene browsers allow users to search for imagery by date range or location. When searching by location, users can click on a map, enter latitude and longitude coordinates, or select a location from a list of locations and regions. Once a location is selected, users will receive information about swaths and the number of available images. When users click on an image, they are shown true-color and derived-chlorophyll browse images of the scene and given options for downloading Level-1 and Level-2 data. Users can also get all the images for a particular location by clicking the “order data” button. When searching for imagery by date, users can select a year, month, and day, and then choose from the available locations highlighted on the map.

The browsers also display imagery from other sensors in addition to HawkEye; users simply select the instruments of interest for a particular area or date. This lets users compare imagery between instruments and download data and imagery from multiple sensors for a particular location, all from one online tool.

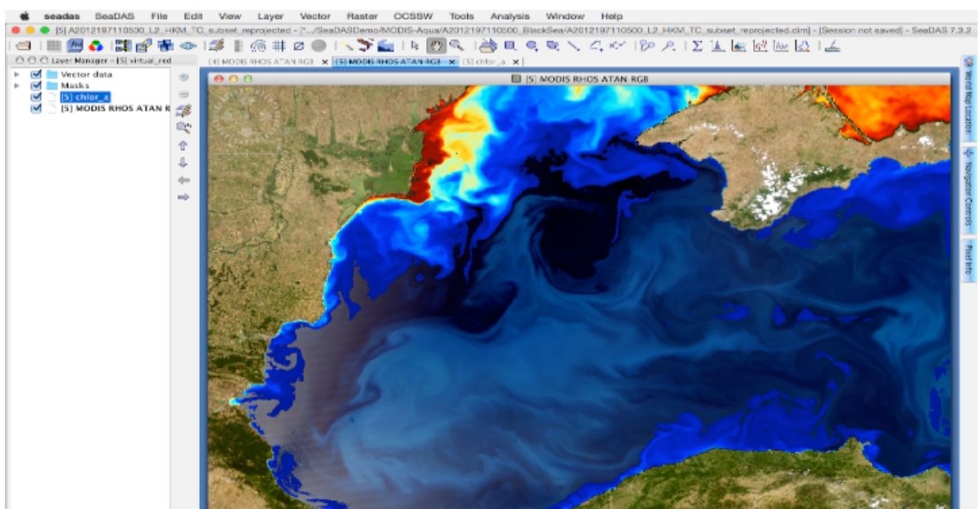

In addition to using the browsers, users can download SeaDAS 8, the latest version of the award-winning, free software package from NASA’s Ocean Biology Processing Group (OBPG) for the processing, display, analysis, and quality control of ocean color data. SeaDAS gives users the ability to analyze, display, and process data from all the ocean color missions that the OBPG supports, including HawkEye.

“We’ve always believed that the scientific process means you should be able to reproduce somebody else’s results,” said Feldman. “In this day and age, that means being able to reproduce the products someone else produces to see if we’re doing it right.”

SeaDAS provides access to the data processing source code, which allows users to modify existing algorithms or develop and test new ones. In doing so, it supports users’ ability to do their own science and share their discoveries with the entire ocean color community.

“We have benefitted greatly through the community’s input by giving out SeaDAS with the source code,” said Feldman. “It’s a way for people to find errors that we might be making or allow them to develop a new algorithm and then give us the code, which we can then integrate into the next version of SeaDAS. It’s been very effective.”