It is with great honor that I acknowledge my nomination to deliver the Leptoukh Lecture at AGU 2023, a recognition that holds special significance for me. My early career was marked by the privilege of working alongside Greg Leptoukh and our discussions on the value of semantic metadata. This essay was originally intended as my presentation for the Leptoukh Lecture. Unfortunately, due to an unforeseen medical emergency within my family, my participation in AGU 2023, whether in person or virtually, is not possible. However, I have adapted my lecture into an essay format to share with the Informatics community.

This essay is structured into four distinct sections. The first section is my heartfelt acknowledgment to the many individuals who have supported and influenced me throughout my career. The second section delves into my personal journey within the field of informatics, highlighting the valuable lessons and experiences I've gathered over the years. In the third section, I address a central challenge that has consistently emerged in my work—the issue of scale in science, particularly within Earth Science. This perpetual problem has been a focus of my research and development. The final section shifts to present and future perspectives, discussing how the integration of Artificial Intelligence into informatics is a potential current solution to the scalability challenges we face in our field.

Acknowledgements

I would like to start with some acknowledgments, beginning with a tribute to my parents. While the path of destiny is ours to choose, our beginnings are not within our control. I consider myself fortunate to have been born to supportive parents who fostered a learning environment free of constraints and the fear of failure. This nurturing has been instrumental in shaping my journey.

Any work in informatics and data science is a collaborative endeavor, and this fact cannot be overstated. It is a team sport where any individual brilliance is amplified by collective effort. I would like to express my sincere gratitude to all my colleagues, collaborators, and students over the years who have worked with me. Their contributions, insights, and teamwork have been key in achieving our shared goals. I would also like to thank all the program managers from institutions like NASA, the National Science Foundation (NSF), and the U.S. Department of Energy (DOE). Their support and faith in my projects have been crucial.

My informatics journey focused on transforming petabytes to insights is not just a personal journey but a collective endeavor supported by many remarkable individuals, and I thank them all for their support.

My Path in the World of Informatics

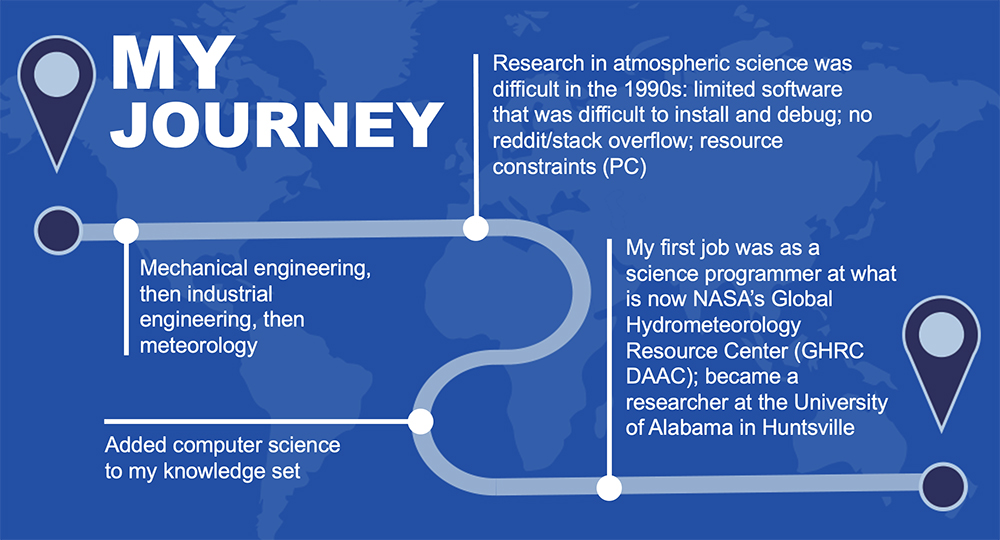

My journey into the world of informatics has been a convoluted, yet enriching experience. It began in the field of mechanical engineering, where my initial interests veered towards industrial engineering. This interest was further fueled during my internship at a Toyota factory, where I was involved in building light commercial vehicles (LCV). However, a pivotal shift occurred when I switched to meteorology, which, to me, strikingly resembled engineering fluid dynamics.

My pathway through research in atmospheric science, particularly in the 1990s, was fraught with challenges. The era was marked by limited scientific analysis software availability and software that was often difficult to install and debug. The absence of online communities like Reddit or Stack Overflow meant that troubleshooting and learning were predominantly solitary endeavors. These hurdles necessitated learning to operate within the constraints of available resources, especially when handling complex tasks like loading dual-Doppler radar data on personal computers.

This need for adaptation led me to delve deeper into computer science, where I acquired knowledge in algorithms, data structures, and pattern recognition, among other areas. This foundational understanding in computer science paved the way for my first job as a science programmer at what is now known as the Global Hydrometeorology Resource Center (GHRC), which is a NASA Distributed Active Archive Center (DAAC). Subsequently, my career progressed as I took up a researcher role at the University of Alabama in Huntsville (UAH), further shaping my path in this ever-evolving field.

Throughout my career, I have been fortunate to engage in various stages of the data lifecycle, working on large-scale platforms and systems, as well as developing sophisticated algorithms and tools for analysis. My involvement has spanned multiple levels, from the details of component-level operations to the broader perspective of system-level integration. I have taken on diverse roles such as principal investigator (PI), Co-PI, collaborator, and team member, each providing me unique insights and learning experiences.

Focusing on the data lifecycle, one of my key projects involved the Earth Science Markup Language, which leveraged XML to describe content metadata, structural metadata, and semantic metadata using ontologies to tackle the problem of the proliferation of heterogeneous data formats. I contributed to extending Rob Raskin’s Semantic Web for Earth and Environmental Terminology (SWEET) ontology and collaborated in the ESIP Semantic Web Cluster with notable figures like Rob Raskin, Greg Leptoukh, and Peter Fox. I designed NOESIS, a semantic search engine, which featured an ontology-based inference service that maps user queries to underlying catalogs and databases based on semantics. Another significant project was the Data Album, an ontology-based data and information curation tool centered around different atmospheric phenomena. My recent endeavors explore the automation in data governance and management, leading to the development of a modern data governance framework (mDGF).

Turning to analysis and data platforms/systems, I have led many projects in data mining and pattern recognition. The ADaM project stands out, focusing on data mining applications in science, complemented by data mining web services. I have also been involved in developing workflow platforms like TALKOOT. On the cyber infrastructure front, LEAD, based on pre-Cloud GRID technology, and BioEnergyKDF are my notable contributions. My recent applications include developing the concept for the Hurricane Portal and a similarity image search for satellite imagery developed for the FDL Challenge, underscoring my ongoing commitment to advancing the field of data science and informatics.

Reflecting on my journey through the realms of data science and informatics, three key lessons stand out, each derived from my diverse experiences.

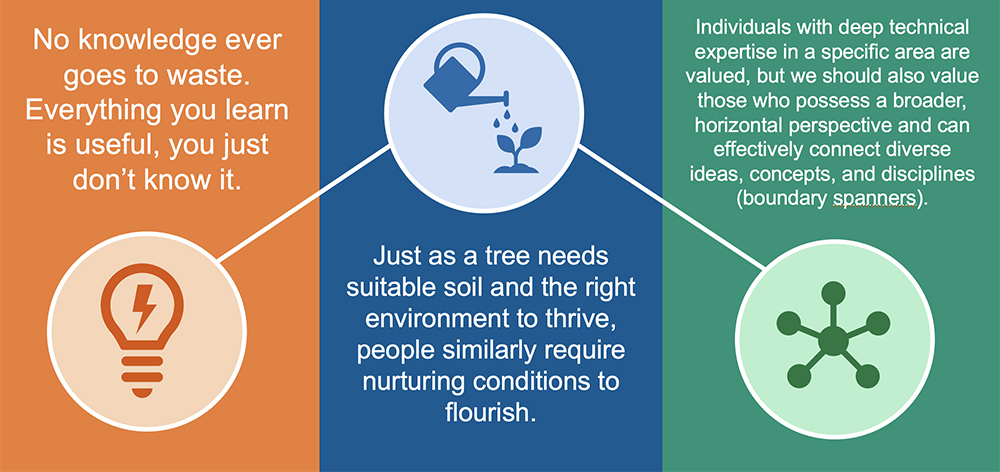

First, every piece of knowledge is valuable, though its utility may not be immediately apparent. This lesson was underscored during my internship at Toyota, where I was introduced to the principles of Kaizen ("continuous improvement") and Kanban ("visual signs") used in inventory management and scheduling. These methodologies, rooted in Japanese management processes, have significantly influenced Agile software development processes that my team uses now. This experience taught me that no knowledge ever goes to waste, a principle that I have held onto throughout my career.

Second, finding and fostering the right environment, akin to how a tree needs suitable soil and conditions to thrive, is crucial. It's essential to prioritize the right environment over titles or salary. In such nurturing conditions, growth and success naturally follow. Likewise, it's equally important to strive to create such an environment for others, enabling them to flourish. This understanding has influenced not only my personal growth but also how I interact and support those around me.

Finally, the value of being a boundary spanner cannot be overstated. In a world that often glorifies deep technical expertise in specific areas, the ability to possess a broader, horizontal perspective is invaluable. This involves the capacity to connect, communicate, and integrate across diverse domains, disciplines, or areas of expertise. Such a skill set is vital in a field as interdisciplinary as data science and informatics, where bridging different concepts and ideas leads to innovative solutions and advancements.

These lessons, gleaned through varied experiences, have been instrumental in shaping my approach and philosophy in leading teams and projects.

The Unchanging Problem of Scale

Earth science's scaling problem is a multifaceted challenge. The crux of the problem lies in the rapidly increasing volume of data, expanding computational capabilities, and the accelerating pace of research. This continuous evolution is not only propelling scientific discoveries at an unprecedented rate, but is also necessitating the development of new skills and collaborative approaches. Moreover, it brings to the forefront a host of new challenges in data management and governance.

However, it is crucial to recognize that scaling is not solely a data issue. While the Big Data challenges characterized by Volume, Variety, and Velocity have always been a part of scientific inquiry, the current scenario demands more than just handling large datasets. Scientists are required to filter through immense quantities of information to extract meaningful insights for their research. This task underscores the need for efficient data and information management processes that are flexible and adaptive, capable of scaling according to the changing demands of data volume and variety, and that have evolving policies and frameworks. Tackling Earth science's scaling problem requires a comprehensive approach that addresses not only the technical aspects of data management, but also the broader implications on scientific processes and ethical considerations.

The issue of managing vast quantities of data in scientific research, particularly in Earth and space science, is far from new. John Naisbitt's poignant observation in 1982, "Drowning in information and starving for knowledge," aptly captures the essence of this challenge. This sentiment was echoed in the same year by the National Research Council, which emphasized the difficulties in processing, storing, and retrieving data from space instruments for scientific use, as outlined in their report Data Management and Computation: Volume 1: Issues and Recommendations.1

The advent of the computer revolution in the early 1980s marked an inflection point in science, transforming how we approach complex questions about our universe. The influence of computers was particularly profound in science, where they radically changed the very nature of scientific inquiry. NASA, a key player in this arena, evolved into a knowledge agency.2 The enduring legacy of missions like the Mars Surveyor, Hubble, and the Moderate Resolution Imaging Spectroradiometer lies not in their operational lifespans, but in the valuable data they have collected.2 These datasets have revolutionized fields such as astrophysics, solar system exploration, space plasma physics, and Earth science.

The expansion of NASA's scope from mission planning and execution to include data collection, preservation, and dissemination has presented new challenges. As the collection of observations grew, there was an increasing need for tools and methodologies capable of accessing, analyzing, and mining data. This involves recognizing patterns, performing cross-correlations, and combining observations of different types, scalable to billions of objects. The 2002 report by the Space Studies Board of the National Research Council, Assessment of the Usefulness and Availability of NASA’s Earth and Space Science Mission Data, further highlights these challenges. It calls for collaboration between scientists, the information-technology community, and NASA to develop new tools and methodologies that can handle the ever-increasing complexity and volume of data.2 This historical perspective underscores the ever ongoing and evolving challenge of transforming petabytes of data into actionable insights.

The ultimate objective, as emphasized in the 2002 report by the National Research Council's Space Studies Board, Assessment of the Usefulness and Availability of NASA’s Earth and Space Science Mission Data, is clear: The generation of knowledge should be the primary goal guiding the design and budget allocations of each mission.2 This principle places a significant responsibility on us, the data science and informatics community.

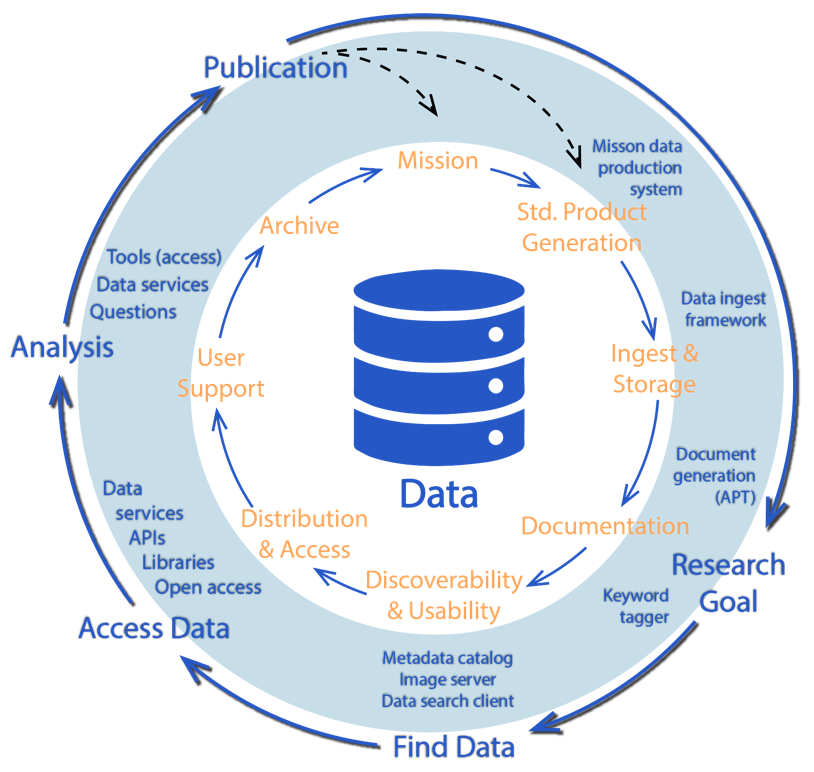

Our primary task is to manage the science data life cycle effectively, especially in light of the scaling problems presented by the ever-increasing volumes of data. This involves not only handling the data but also ensuring that the entire process, from collection to analysis, is efficient, effective, and adaptable to the evolving landscape of scientific research. Additionally, we are tasked with supporting the research life cycle in innovative ways, enabling researchers to derive meaningful insights from large data archives. This support is crucial in transforming the vast amounts of data into actionable knowledge.

To meet these challenges, it is essential to recognize that the evolution of data and the research life cycle is not a one-time effort but a continuous process. It requires ongoing adaptation, improvement, and innovation. As data volumes grow and scientific questions become more complex, the strategies and tools we develop must be scalable and flexible enough to accommodate these changes. The onus is on us, as members of the Data Science and Informatics community, to not only keep pace with these changes, but to anticipate and shape the future of scientific data management and analysis.

The traditional view of the data and research life cycle highlights the interplay between various stages of data management and the broader context of scientific research. At the core of this perspective is the data life cycle, encompassing all the stages through which data pass, from initial generation to their eventual archiving or disposal. This data life cycle, in conjunction with data/information systems and infrastructure, forms the foundational support for the research life cycle.

Central to this relationship is a perpetual feedback loop where technology and research continually drive and inform each other. Technological advancements open up new avenues for research, while findings from research, in turn, fuel technological innovations. This dynamic interaction is a key driver in the evolution of both data management and scientific inquiry.

Looking at the research life cycle, the development of new instruments and algorithms leads to the generation of more data, necessitating increasingly complex analysis methods. This growth in data volume and complexity feeds back into the data life cycle, where it influences governance, data management processes, and the development of new tools and infrastructure. These tools are essential for supporting new ways of data discovery, access, visualization, and analysis.

However, finding the right balance between the complexity and cost of the data life cycle and the demands of the research life cycle is crucial. Overly complex systems or skewed priorities can hinder progress and efficiency. The goal is to maintain a system that is both robust and adaptable, capable of evolving in response to new challenges and opportunities. This requires a readiness to quickly adopt and infuse new technologies, ensuring that both the data and research life cycles are not only sustained, but also continually optimized to advance scientific knowledge and understanding.

AI—A New Inflection Point

The advent of Artificial Intelligence (AI) marks a new inflection point, significantly impacting both the data and research life cycles. This impact is particularly evident in the concept of AI foundation models (FM), a development that is reshaping the landscape of data management and scientific research.

Foundation models are large-scale, pre-trained models that serve as a versatile base for various specific tasks. These models, exemplified by large language models (LLMs) such as GPT-x, BERT, and other large transformer models, are trained on massive datasets. This general-purpose training is then fine-tuned for specific tasks or domains using smaller, task-specific datasets. The key advantage of these models is their ability to leverage the vast knowledge captured during their pre-training phase. This allows them to achieve high performance across a wide range of tasks with minimal task-specific data input. Effectively, these models encode patterns, relationships, and knowledge from their pre-training data into their mathematical structure. The benefits of foundation models are multifaceted. A single model can be adapted to build a variety of applications, reducing downstream costs associated with computation and the labor required to build large training datasets. This flexibility and efficiency make foundation models particularly valuable in the context of the data and research life cycles.

However, foundation models are complex and require significant resources. Large datasets and considerable computational power are essential for training and fine-tuning these LLMs and other foundation models. This requirement highlights the need for substantial investment in computation, storage, and expertise.

The integration of AI FMs in the data and research life cycles represents a significant shift, particularly in how we approach both the upstream and downstream aspects of these cycles. In the upstream phase, LLMs, a type of FM, can play a transformative role in the data life cycle, especially when tailored for science-specific applications. In the downstream phase, FMs built on scientific datasets can substantially augment data analysis at scale. These models can help analyze large volumes of scientific data more efficiently than traditional methods, providing valuable insights and aiding in the discovery process.

It is also important to recognize that having advanced models alone is not sufficient. A robust infrastructure is essential to enable the scientific community to develop AI-enabled applications.

Use of Large Language Models

Large language models have significantly advanced our ability to computationally understand and generate human language, opening up a spectrum of applications across various domains. However, these capabilities are not without their limitations, necessitating specific techniques to optimize their effectiveness.

The effectiveness of LLMs is greatly dependent on the art of prompt engineering, which is crafting prompts that lead to desired outputs. The goals of prompt engineering include ensuring clarity, relevance, and creativity in prompts. Various prompting methods like example answer instances, different learning types (zero-shot, one-shot, few-shot learning), and types of prompts (prefix, cloze, anticipatory, chain of thought, heuristic, and ensemble prompts) are used to guide LLMs in generating more accurate and creative responses.

LLMs are heavily reliant on extensive datasets for pre-training and it may be challenging to adapt LLMs to new or untrained data. This limitation poses a barrier to their broader application. Furthermore, LLMs sometimes generate plausible but incorrect information, a phenomenon often referred to as "hallucination." This issue highlights the need for accuracy-enhancing techniques.

To address these accuracy issues, several methods are employed. Context appending involves incorporating additional relevant information into the input to provide more context to the model, aiding in more accurate responses. The chain-of-thought (CoT) technique guides the model to construct answers step-by-step, thereby enhancing the logical flow and accuracy of the responses. Similarly, the use of self-consistency ensures that the model's responses are internally consistent, further enhancing reliability.

Fine-tuning LLMs with new data can also be used for overcoming their inherent limitations and for tailoring them to specific tasks. Another emerging strategy is the use of the retrieval augmented generation (RAG) service, which enhances LLMs by combining them with information databases. This approach is particularly beneficial in fields like data governance, management, search/discovery, access, and analysis.

The RAG model significantly enhances text generation by first retrieving and integrating relevant information, thereby improving the quality and reliability of the generated text. In this model, embeddings and vector databases play a crucial role in facilitating accurate text generation and information retrieval.

LLMs have been applied in various tasks, including governance/policy through tools like Data Governance Framework Compliance Checker, data/information curation for NASA's Airborne Data Management Group (ADMG) Project, data access using natural language queries of NASA's Fire Information for Resource Management System (FIRMS) database, and data management through Global Change Master Directory Keyword. These applications underscore their potential in enhancing the efficiency and effectiveness of processes in these areas. These applications emphasize the transformative potential of LLMs in various aspects of data and research life cycles.

AI Foundation Models Built from Science Data

In the same vein, the construction of AI FM models pre-trained specifically on high value science data marks a significant step forward in this direction. Our prototype effort in collaboration with IBM Research, the Harmonized Landsat and Sentinel-2 (HLS) Geospatial FM, Prithvi,3 exemplifies how FMs can address the complexities and limitations associated with traditional AI models in scientific research.

Traditional AI models often face challenges due to the increasing scale of data and model sizes. They typically require extensive human-labeled data and are limited to specific tasks. FMs, in contrast, use large volumes of unlabeled data for initial pre-training, reducing reliance on human-labeled datasets. This approach is more cost-effective and versatile, allowing the models to adapt to specific tasks with less labeled data.

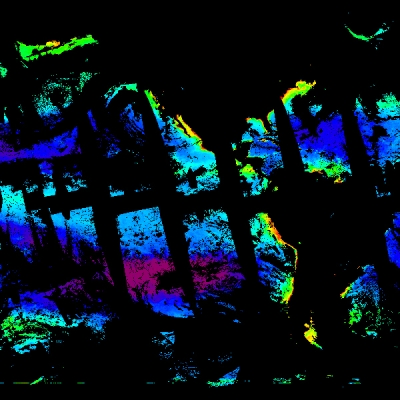

A critical aspect of designing a GeoFM like Prithvi involves handling imperfect remote sensing data, such as removing noise and avoiding redundant information. Prithvi utilizes a masked autoencoder (MAE) architecture, a self-supervised learning model adept at reconstructing partially masked images. It incorporates an asymmetric encoder-decoder setup, where the encoder processes visible image patches, and the decoder reconstructs the masked areas. To cater to the specific requirements of satellite imagery, Prithvi includes adaptations like 3D positional and patch embeddings, acknowledging the spatiotemporal attributes of geospatial data.

Prithvi was evaluated using three different use cases. In the Multi-Temporal Cloud Gap Imputation, Prithvi was fine-tuned for filling gaps in satellite imagery caused by cloud coverage. Prithvi demonstrated superior performance compared to a CGAN baseline model, even with less training data. In a flood mapping use case, Prithvi was utilized in identifying flood-affected areas from satellite imagery. In this case, Prithvi outperformed standard transformer architectures and baseline models, showing effectiveness over extended training periods. Finally, in a wildfire scar mapping use case, Prithvi excelled in segmenting wildfire scars in satellite imagery, showcasing its robustness and efficiency over other models.

Prithvi's development and application in various use cases underscore the potential of FMs in revolutionizing AI's role in scientific analysis. By overcoming the constraints of traditional AI models and efficiently adapting to specific tasks, FMs like Prithvi represent a promising future for AI in scientific data processing and analysis.

The prototyping of the Prithvi FM for geospatial analysis has yielded valuable insights, particularly concerning the model's performance and its distinct advantages in certain scenarios. While these models may not outperform all state-of-the-art models in every application, they exhibit unique strengths, especially in contexts with limited labeled data. Prithvi benefits significantly from extensive self-supervised pre-training. This approach not only enhances its accuracy but also accelerates the speed of fine-tuning it for specific tasks. The large-scale pre-training equips the model with a robust foundational knowledge base that can be effectively applied and adapted to various tasks.

One of the most notable strengths of Prithvi is its data efficiency. The model is capable of achieving commendable performance levels with relatively lesser data. This feature is particularly crucial in geospatial tasks where acquiring extensive labeled datasets can be both challenging and costly. Prithvi has shown an impressive ability to generalize across different resolutions and geographic regions. This characteristic is vital for a model intended for global-scale applications, as it ensures consistency and reliability of the model's performance irrespective of geographical variances.

The conscious decision to provide open access to the Prithvi project's code base, model architecture, pretrained weights, and workflow is both our commitment to open science and a strategic one to propel the domain of AI within geoscience and remote sensing. This act of making these key resources available to the public serves to enrich the wider scientific community, enabling enhanced research, innovation, and the development of new applications. This approach of open accessibility not only fosters a culture of transparency, but also spurs collaborative efforts and collective advancements in the field. We envision this approach will pave the way for the creation of more sophisticated and targeted AI tools in Earth science.

Robust Infrastructure for AI Foundation Models

The true potential of AI FMs can only be reached if there is a robust infrastructure that supports them. This infrastructure is pivotal for the scientific community to effectively develop and implement AI-enabled applications. To cater to the complexities of scientific datasets and the demanding computational requirements of FMs, this infrastructure must be comprehensive.

The AI technology stack is envisioned as a multi-layered framework, each layer contributing uniquely to the functionality of AI models. At the top is the application layer, where end-users engage, either by operating model pipelines through end-to-end (E2E) applications or by utilizing third-party APIs for FM AI models. Next is the model layer, a diverse repository of AI models accessible via open-source checkpoints. This layer hosts both LLMs as well as science specific FMs such as Prithvi, with model hubs playing a pivotal role in the distribution and sharing of these FMs. The infrastructure layer forms the backbone of the stack, comprising platforms and hardware elements, notably cloud platforms and/or high end compute, tasked with executing the training and inference activities for AI models. Lastly, the orchestration and monitoring layer is crucial for managing the deployment, comprehension, and security of AI models, ensuring they operate both efficiently and securely.

For effective use of LLMs, the RAG component also needs to be part of the AI stack. Central to this component is the efficient management and use of data vectors in vector databases and the role of embeddings in LLMs. These embeddings, representing text as fixed-size numerical arrays, are pivotal in tasks like semantic search and question answering, aiding in more accurate text generation and information retrieval. The component also provides a data processing pipeline that encompasses collecting diverse but highly-curated source data, chunking it into smaller segments, transforming these into vector representations, and building a vector database for storage. It then retrieves relevant data segments for text generation, effectively addressing long-term memory challenges in LLMs. Vector databases in the RAG model facilitate this process through indexing for faster searches, querying for nearest neighbors, and post-processing to retrieve and refine the final data, thereby significantly bolstering the efficiency and functionality of LLMs.

All these components in the AI infrastructure stack are crucial for facilitating the development and deployment of AI-driven scientific applications. We envision a future where this infrastructure stack is provided as a tailored platform for science, encompassing science-specific FMs, evaluation suites, and benchmarks made available to the community to effectively utilize this technology. The roadmap to this future also emphasizes developing tutorials for leveraging the platform effectively, selecting appropriate models for tasks, and deploying applications, as well as developing playbooks for building FMs for high-value science data.

The Need for Collaboration

The necessity for collaboration in AI for science stems from the inherent complexity of scientific problems and the vastness of data involved. These intricate challenges demand a multidisciplinary approach, as no single research group or institution possesses the complete spectrum of resources and expertise needed to effectively develop FMs. The diversity and volume of scientific data call for varied expertise, ensuring a comprehensive understanding and innovative solutions. This collaborative model is essential, especially in AI, where the development of versatile FMs requires insights from various AI subfields. Furthermore, pooling resources such as labeled datasets and benchmarks across different groups enhances the validation and applicability of these models, making them suitable for a wide range of applications.

Our approach advocates for the inclusion of diverse groups to ensure a broad spectrum of perspectives in scientific research. This involves engaging key stakeholders: dedicated science experts advancing knowledge in their fields, universities and research organizations providing necessary infrastructure and support, and tech companies offering essential technological solutions and resources. Such a collaborative environment not only fuels innovation but also ensures that the developed solutions are robust and well-aligned with the current scientific challenges. By integrating interdisciplinary expertise with the support of various stakeholders, we can drive the frontiers of science and AI forward in a cohesive and impactful manner.

AI Grounded in Open Science Principles

We have to acknowledge AI’s presence and lean into its potential. To cultivate trustworthiness, we should commit to transparency in governance, thereby allaying fears within the scientific community. This commitment involves adopting open models, workflows, data, code, and validation techniques, and being transparent about AI's role in applications. Building user trust in AI is crucial, and is achievable through providing factual answers, attributing sources accurately, and striving to minimize bias.

Moreover, fostering community involvement is key; we need to encourage collaboration across organizations and share resources to define valid AI use cases and benchmarks. Educating users about responsible AI interaction is another essential step. This can be done through workshops, comprehensive documentation, and enhanced engineering skills that help users understand the strengths and weaknesses of AI models.

Finally, it's vital to continuously monitor and assess emerging AI techniques, like RAG or constitutional AI. This continuous evaluation and assessment ensures that AI remains trustworthy and reliable. The development and use of AI must be grounded in open science principles, thereby reinforcing its alignment with open and responsible scientific inquiry.

Closing Thoughts

A quote from the 2002 assessment by the National Research Council poignantly captures the essence of our scientific endeavors: "Long after the operational cessation of iconic missions like the Mars Surveyor, Hubble, and others, their most enduring legacy is the wealth of data they have amassed. This data, a repository of invaluable insights, holds the potential for continued exploration and discovery, transcending the operational lifespan of the instruments that collected it."

This quote aptly captures the immense potential for more effective utilization of the data we have. In the context of streamlined data management and governance, there lies an opportunity to significantly enhance the value and utility of these data. Achieving this will require a paradigm shift in our approach towards tools, processes, and policies, along with the evolving roles of individuals in the field of informatics. This shift is not just a technical challenge but also a conceptual one, demanding new ways of thinking and operating.

Incorporating AI into this landscape amplifies these possibilities. AI has the potential to profoundly enhance the utilization of our existing data, offering innovative ways for managing and governing these data more efficiently and effectively. However, this integration is not just about leveraging advanced technology; it also necessitates a forward-thinking perspective on the evolving role of informatics and its impact on the future of scientific inquiry.

We know that new technology often reshapes our activities, making tasks cheaper and easier. This change might manifest as doing the same with fewer people or accomplishing much more with the same number of individuals—thereby addressing the challenge of scale. It is important to remember that new technology tends to redefine what we do. Initially, we attempt to fit new tools into old ways of working, but over time it becomes apparent that our methods and processes need to adapt to accommodate the capabilities of these new tools.

For those of us working in this space, this serves as a great reminder of our fundamental role in shaping the way science is conducted. While the adoption of new methodologies and technologies takes time, the impact we have is profound and enduring. We are not merely building and adopting new tools; we are active participants in the evolution of scientific inquiry, redefining what is possible in our quest for knowledge and understanding.

References

1. National Research Council (1982). Data Management and Computation: Volume 1: Issues and Recommendations. Washington, D.C.: The National Academies Press. doi:10.17226/19537

2. Task Group on the Usefulness and Availability of NASA’s Space Mission Data, Space Studies Board, National Academies of Sciences, Engineering, and Medicine (2002). Assessment of the Usefulness and Availability of NASA's Earth and Space Science Mission Data. Washington, D.C.: The National Academies Press. doi:10.17226/10363

3. Jakubik, J., et al. (2023). Foundation Models for Generalist Geospatial Artificial Intelligence. arXiv preprint arXiv:2310.18660. doi:10.48550/arXiv.2310.18660